1 Recommendations

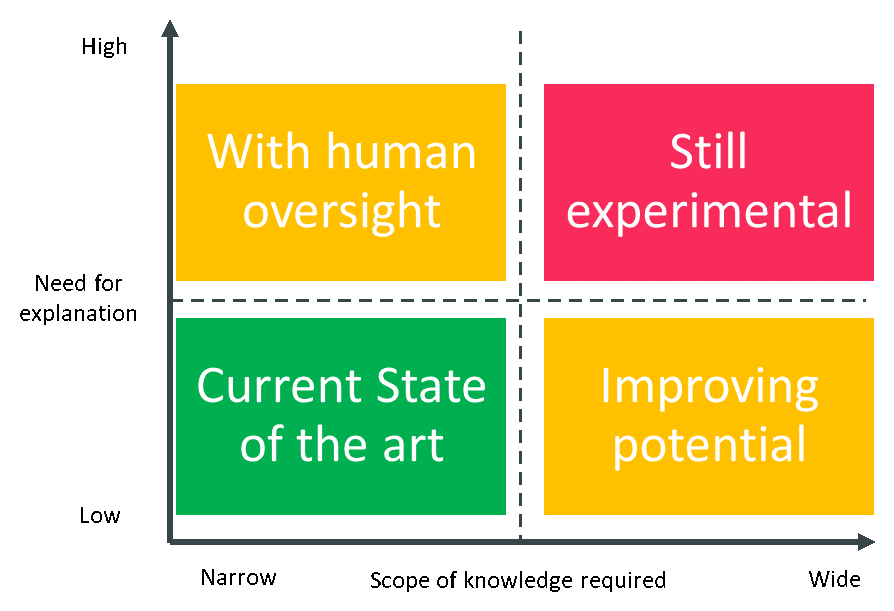

The areas where AI can practically be applied today can be mapped along two dimensions: the scope of knowledge required and the need for explanation. The need for explanation is usually linked to the need for legal justification in cases where the potential consequences of mistakes are high. This is illustrated in Figure 1.

Organizations should look for applications that fit into the green area of the diagram and use caution when considering those that would lie in the amber areas. The red area is still experimental and should be considered suitable only as a research activity.

Today, AI technologies can be applied successfully to the following kinds of problems:

- With narrow fields of knowledge; for example, the analysis of cyber security event data.

- Where large amounts of data exist in a form that can be analyzed, and the correct results are known; for example, marketing analytics.

- Where precise cognitive skills are needed repetitively; for example, visual detection of faulty components on production lines.

- Where there is little or no need for explanation of the conclusions; for example, vehicle route planning.

- Where human oversight can be applied to provide explanations and to mitigate the potential for mistakes made by the system; for example, the detailed diagnosis of specific forms of illness.

There is growing potential to apply these systems to areas that require wider knowledge, or to transfer knowledge acquired in one area to another, where the need for explanation is low; for example, applying the way in which problems are diagnosed in cyber security to the detection of fraud.

The application of these technologies to areas that require wide knowledge and where mistakes have the potential to cause significant harm is still experimental.

In addition, take care to consider the ethical aspects; in particular, avoid bias, understand the need for explanation, clarify responsibility and manage any potential economic impacts.